Contents

- OpenStack configuration overview

- Common configurations

- Application Catalog service

- Bare Metal service

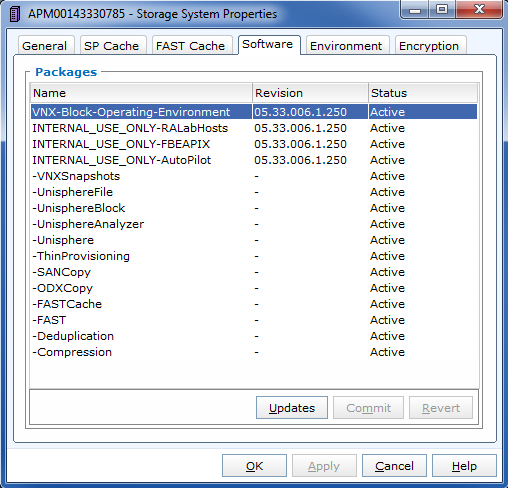

- Block Storage service

- Introduction to the Block Storage service

- Volume drivers

- Backup drivers

- Block Storage schedulers

- Log files used by Block Storage

- Fibre Channel Zone Manager

- Nested quotas

- Volume encryption supported by the key manager

- Additional options

- Block Storage service sample configuration files

- New, updated, and deprecated options in Newton for Block Storage

- Clustering service

- Compute service

- The full set of available options

- New, updated, and deprecated options in Newton for Compute

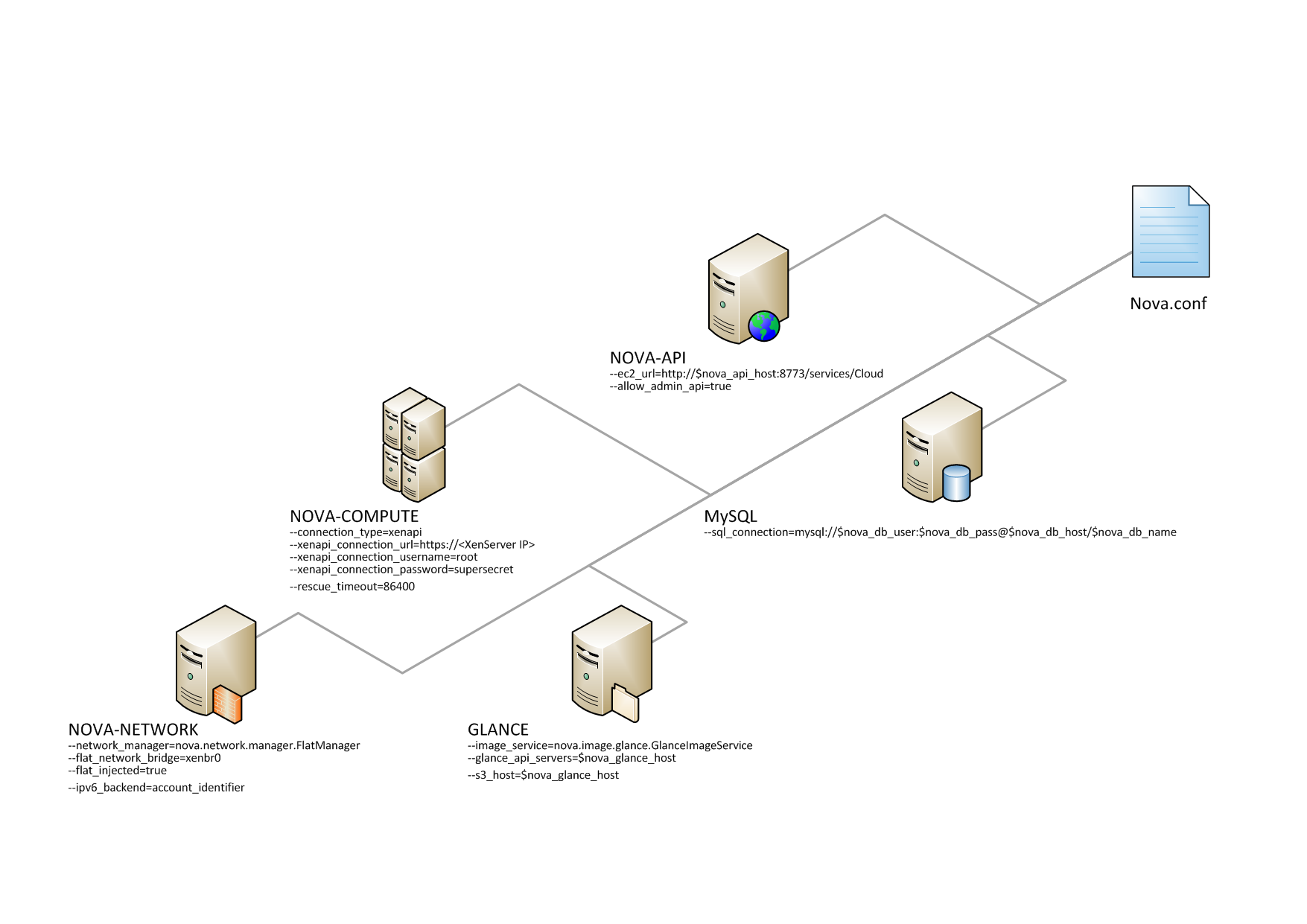

- Overview of nova.conf

- Compute API configuration

- Configure resize

- Database configuration

- Fibre Channel support in Compute

- iSCSI interface and offload support in Compute

- Hypervisors

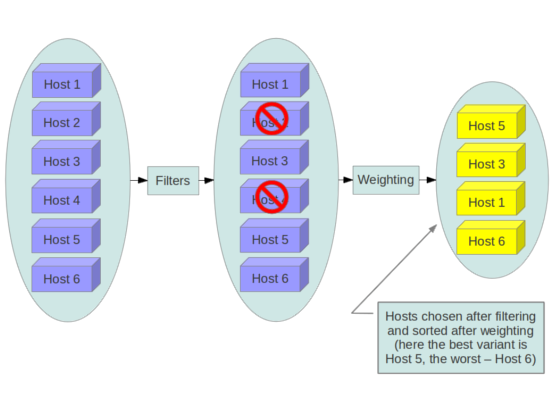

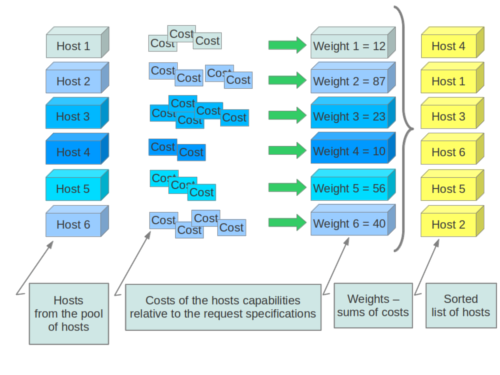

- Compute schedulers

- Cells

- Conductor

- Compute log files

- Example nova.conf configuration files

- Compute service sample configuration files

- Dashboard

- Data Processing service

- Database service

- Identity service

- Image service

- Message service

- Networking service

- Networking configuration options

- Firewall-as-a-Service configuration options

- Load-Balancer-as-a-Service configuration options

- VPN-as-a-Service configuration options

- Log files used by Networking

- Networking sample configuration files

- Networking advanced services configuration files

- New, updated, and deprecated options in Newton for Networking

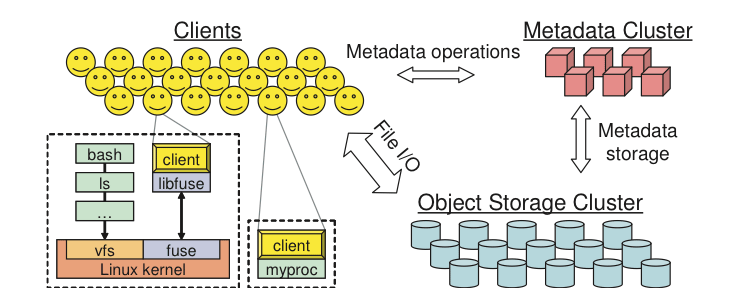

- Object Storage service

- Introduction to Object Storage

- Object Storage general service configuration

- Object server configuration

- Object expirer configuration

- Container server configuration

- Container sync realms configuration

- Container reconciler configuration

- Account server configuration

- Proxy server configuration

- Proxy server memcache configuration

- Rsyncd configuration

- Configure Object Storage features

- Configure Object Storage with the S3 API

- Endpoint listing middleware

- Object storage log files

- New, updated, and deprecated options in Newton for OpenStack Object Storage

- Orchestration service

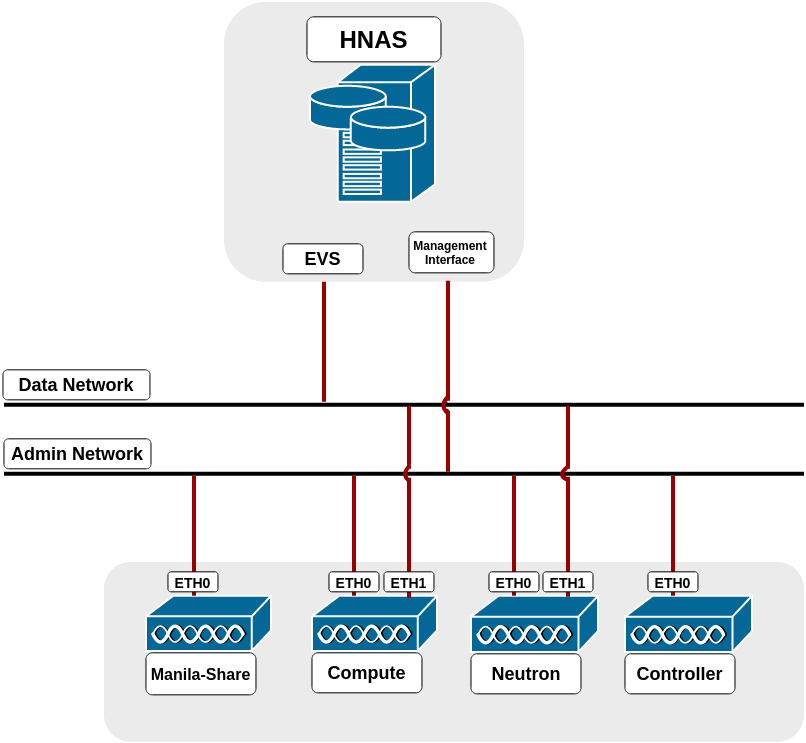

- Shared File Systems service

- Telemetry service

OpenStack Configuration Reference

Abstract

This document is for system administrators who want to look up configuration options. It lists the configuration options available in OpenStack; the options and their descriptions are auto-generated from the code of each project. It also includes sample configuration files.

OpenStack configuration overview

Conventions

The OpenStack documentation uses several typesetting conventions.

Notices

Notices take these forms:

Note

A comment with additional information that explains a part of the text.

Important

Something you must be aware of before proceeding.

Tip

An extra but helpful piece of practical advice.

Caution

Helpful information that prevents the user from making mistakes.

Warning

Critical information about the risk of data loss or security issues.

Command prompts

$ command

Any user, including the root user, can run commands that are prefixed with the $ prompt.

# command

The root user must run commands that are prefixed with the # prompt. You can also prefix these commands with the sudo command, if available, to run them.

Configuration file format

OpenStack uses the INI file format for configuration files. An INI file is a simple text file that specifies options as key=value pairs, grouped into sections. The DEFAULT section contains most of the configuration options. Lines starting with a hash sign (#) are comment lines.

For example:

    [DEFAULT]
    # Print debugging output (set logging level to DEBUG instead
    # of default WARNING level). (boolean value)
    debug = true

    [database]
    # The SQLAlchemy connection string used to connect to the
    # database (string value)
    connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
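As a rough illustration of the format described above, the following sketch reads the same sample with Python's standard configparser module. This is only a stand-in chosen for the example; OpenStack services load their files through their own configuration library, and the helper name load_options is hypothetical.

```python
import configparser

# The sample from the text above, kept verbatim.
SAMPLE = """
[DEFAULT]
# Print debugging output (set logging level to DEBUG instead
# of default WARNING level). (boolean value)
debug = true

[database]
# The SQLAlchemy connection string used to connect to the
# database (string value)
connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone
"""


def load_options(text):
    """Parse INI text; hypothetical helper for this example only."""
    # interpolation=None keeps "%" characters in values literal.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return parser


parser = load_options(SAMPLE)
debug = parser.getboolean("DEFAULT", "debug")
connection = parser.get("database", "connection")
print(debug)
print(connection)
```

Lines that start with # are skipped as comments, and each option is looked up by section name and key.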

Option values have different types. The comments in the sample configuration files always state the type, and the tables give the Opt type as the first item of the description, for example (BoolOpt) Toggle....

OpenStack uses the following types:

- boolean value (BoolOpt): Enables or disables an option. The allowed values are true and false.

      # Enable the experimental use of database reconnect on
      # connection lost (boolean value)
      use_db_reconnect = false

- floating point value (FloatOpt): A floating point number like 0.25 or 1000.

      # Sleep time in seconds for polling an ongoing async task
      # (floating point value)
      task_poll_interval = 0.5

- integer value (IntOpt): An integer is a number without fractional components, like 0 or 42.

      # The port which the OpenStack Compute service listens on.
      # (integer value)
      compute_port = 8774

- IP address (IPOpt): An IPv4 or IPv6 address.

      # Address to bind the server. Useful when selecting a particular network
      # interface. (ip address value)
      bind_host = 0.0.0.0

- key-value pairs (DictOpt): A set of key-value pairs, also known as a dictionary. The pairs are separated by commas, and a colon separates each key from its value. Example: key1:value1,key2:value2.

      # Parameter for l2_l3 workflow setup. (dict value)
      l2_l3_setup_params = data_ip_address:192.168.200.99, \
          data_ip_mask:255.255.255.0,data_port:1,gateway:192.168.200.1,ha_port:2

- list value (ListOpt): Represents values of other types, separated by commas. As an example, the following sets allowed_rpc_exception_modules to a list containing the four elements oslo.messaging.exceptions, nova.exception, cinder.exception, and exceptions:

      # Modules of exceptions that are permitted to be recreated
      # upon receiving exception data from an rpc call. (list value)
      allowed_rpc_exception_modules = oslo.messaging.exceptions,nova.exception,cinder.exception,exceptions

- multi valued (MultiStrOpt): A multi-valued option is a string value that can be given more than once; all values are used.

      # Driver or drivers to handle sending notifications. (multi valued)
      notification_driver = nova.openstack.common.notifier.rpc_notifier
      notification_driver = ceilometer.compute.nova_notifier

- port value (PortOpt): A TCP/IP port number. Ports can range from 1 to 65535.

      # Port to which the UDP socket is bound. (port value)
      # Minimum value: 1
      # Maximum value: 65535
      udp_port = 4952

- string value (StrOpt): Strings can be optionally enclosed with single or double quotes.

      # Enables or disables publication of error events. (boolean value)
      publish_errors = false

      # The format for an instance that is passed with the log message.
      # (string value)
      instance_format = "[instance: %(uuid)s] "
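To make the value formats above concrete, here is a minimal sketch of how raw strings from a configuration file could be turned into typed values. The function names are hypothetical and written for this example only; they mirror the formats described in the list and are not the parsing code that OpenStack itself uses.

```python
def parse_bool(raw):
    """Map the strings 'true' and 'false' (any case) to a Python bool."""
    value = raw.strip().lower()
    if value not in ("true", "false"):
        raise ValueError("not a boolean value: %r" % raw)
    return value == "true"


def parse_list(raw):
    """Split a comma-separated list value into stripped items."""
    return [item.strip() for item in raw.split(",") if item.strip()]


def parse_dict(raw):
    """Split 'key1:value1,key2:value2' into a dictionary of strings."""
    result = {}
    for pair in parse_list(raw):
        # Only the first colon separates key and value.
        key, _, value = pair.partition(":")
        result[key.strip()] = value.strip()
    return result


def parse_port(raw):
    """Convert to int and enforce the 1 to 65535 range given above."""
    port = int(raw)
    if not 1 <= port <= 65535:
        raise ValueError("port out of range: %d" % port)
    return port


print(parse_bool("false"))
print(parse_list("oslo.messaging.exceptions,nova.exception"))
print(parse_dict("data_port:1,gateway:192.168.200.1"))
print(parse_port("4952"))
```

A multi-valued option would simply collect every occurrence of the same key into a list instead of keeping only the last one.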

Sections

Configuration options are grouped by section. Most configuration files support at least the following sections:

- [DEFAULT]: Contains most configuration options. If the documentation for a configuration option does not specify its section, assume that it appears in this section.

- [database]: Configuration options for the database that stores the state of the OpenStack service.

Substitution

The configuration file supports variable substitution. After you set a configuration option, you can reference it in later configuration values by preceding its name with a $, like $OPTION.

The following example uses the values of rabbit_host and rabbit_port to define the value of the rabbit_hosts option, in this case as controller:5672.

    # The RabbitMQ broker address where a single node is used.
    # (string value)
    rabbit_host = controller

    # The RabbitMQ broker port where a single node is used.
    # (integer value)
    rabbit_port = 5672

    # RabbitMQ HA cluster host:port pairs. (list value)
    rabbit_hosts = $rabbit_host:$rabbit_port

To avoid substitution, use $$, which is replaced by a single $. For example, if your LDAP DNS password is $xkj432, specify it as follows:

    ldap_dns_password = $$xkj432

The code uses the Python string.Template.safe_substitute() method to implement variable substitution. For more details on how variable substitution is resolved, see http://docs.python.org/2/library/string.html#template-strings and PEP 292.
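The behavior of string.Template.safe_substitute() can be tried directly in Python. The sketch below reuses the option names from the examples above; the options dictionary and the substitute helper are assumptions made for illustration and do not reflect how the configuration library stores its values.

```python
from string import Template

# Previously set options, as in the RabbitMQ example above.
options = {"rabbit_host": "controller", "rabbit_port": "5672"}


def substitute(raw, known):
    """Expand $name references; hypothetical helper for this example."""
    # safe_substitute leaves unknown $names untouched instead of raising.
    return Template(raw).safe_substitute(known)


rabbit_hosts = substitute("$rabbit_host:$rabbit_port", options)
escaped = substitute("$$xkj432", options)
unknown = substitute("$not_defined", options)

print(rabbit_hosts)
print(escaped)
print(unknown)
```

Because safe_substitute() is used rather than substitute(), a reference to an option that has not been set is left in place as literal text.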

Whitespace

To include whitespace in a configuration value, use a quoted string. For example:

    ldap_dns_password='a password with spaces'

Define an alternate location for a config file

Most services and the *-manage command-line clients load the configuration file. To define an alternate location for the configuration file, pass the --config-file CONFIG_FILE parameter when you start a service or call a *-manage command.

Changing config at runtime

OpenStack Newton introduces the ability to reload (or ‘mutate’) certain configuration options at runtime without a service restart. The following projects support this:

- Compute (nova)

Check individual options to discover if they are mutable.

In practice

A common use case is to enable debug logging after a failure. Use the mutable config option called ‘debug’ to do this (provided that log_config_append has not been set). An admin user may perform the following steps:

- Log onto the compute node.

- Edit the config file (for example, nova.conf) and change ‘debug’ to True.

- Send a SIGHUP signal to the nova process (for example, pkill -HUP nova).

A log message is written confirming that the option has been changed. If you use a configuration management system (CMS) such as Ansible, Chef, or Puppet, we recommend scripting these steps through your CMS.
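The general pattern behind this workflow, re-reading a file when SIGHUP arrives, can be sketched in a few lines of Python. This is a toy process that signals itself, not the Compute implementation; the state dictionary, the make_reloader helper, and the temporary file are all assumptions for the example, and SIGHUP is available on Unix-like systems only.

```python
import configparser
import os
import signal
import tempfile

# Stand-in for a service's in-memory option values.
state = {"debug": False}


def make_reloader(path):
    """Return a SIGHUP handler that re-reads 'debug' from path."""
    def reload_config(signum, frame):
        parser = configparser.ConfigParser(interpolation=None)
        parser.read(path)
        state["debug"] = parser.getboolean("DEFAULT", "debug", fallback=False)
    return reload_config


# Create a throwaway config file with debug disabled.
with tempfile.NamedTemporaryFile("w", suffix=".conf", delete=False) as handle:
    handle.write("[DEFAULT]\ndebug = False\n")
    conf_path = handle.name

signal.signal(signal.SIGHUP, make_reloader(conf_path))

# Edit the file, then send SIGHUP to the process (here: ourselves).
with open(conf_path, "w") as handle:
    handle.write("[DEFAULT]\ndebug = True\n")
os.kill(os.getpid(), signal.SIGHUP)

print(state["debug"])
os.remove(conf_path)
```

Only options that a service explicitly marks as mutable are reloaded this way; other options still require a restart.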

OpenStack is a collection of open source project components that enable setting up cloud services. Each component uses similar configuration techniques and a common framework for INI file options.

This guide pulls together multiple references and configuration options for the following OpenStack components:

- Bare Metal service

- Block Storage service

- Compute service

- Dashboard

- Database service

- Data Processing service

- Identity service

- Image service

- Message service

- Networking service

- Object Storage service

- Orchestration service

- Shared File Systems service

- Telemetry service

Also, OpenStack uses many shared services and libraries, such as database connections and RPC messaging, whose configuration options are described in Common configurations.

Common configurations

This chapter describes the common configurations for shared services and libraries.

Authentication and authorization

Only an authenticated agent may send requests to the API. The preferred authentication system is the Identity service.

Identity service authentication

To authenticate, an agent issues an authentication request to an Identity service endpoint. In response to valid credentials, Identity service responds with an authentication token and a service catalog that contains a list of all services and endpoints available for the given token.

Multiple endpoints may be returned for each OpenStack service according to physical locations and performance/availability characteristics of different deployments.

Normally, Identity service middleware provides the X-Project-Id header based on the authentication token submitted by the service client. For this to work, clients must specify a valid authentication token in the X-Auth-Token header for each request to each OpenStack service API. The API validates authentication tokens against Identity service before servicing each request.
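From the client side, this means attaching the token to every request. The sketch below only builds the request object with Python's standard urllib and sends nothing over the network; the URL path and the token value are placeholders invented for the example.

```python
import urllib.request


def build_request(url, token):
    """Attach an authentication token to a request (nothing is sent)."""
    return urllib.request.Request(url, headers={"X-Auth-Token": token})


# Hypothetical endpoint and token, for illustration only.
request = build_request("http://controller:8774/servers", "EXAMPLE_TOKEN")

# urllib stores header names capitalized as "X-auth-token".
print(request.get_header("X-auth-token"))
```

When authentication is disabled, the client would add the X-Project-Id header in the same way.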

No authentication

If authentication is not enabled, clients must provide the X-Project-Id header themselves.

Options

Configure the authentication and authorization strategy through these options:

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| auth_strategy = keystone | (String) This determines the strategy to use for authentication: keystone or noauth2. ‘noauth2’ is designed for testing only, as it does no actual credential checking. ‘noauth2’ provides administrative credentials only if ‘admin’ is specified as the username. |

| Configuration option = Default value | Description |
|---|---|
| [keystone_authtoken] | |
| admin_password = None | (String) Service user password. |
| admin_tenant_name = admin | (String) Service tenant name. |
| admin_token = None | (String) This option is deprecated and may be removed in a future release. Single shared secret with the Keystone configuration used for bootstrapping a Keystone installation, or otherwise bypassing the normal authentication process. This option should not be used; use admin_user and admin_password instead. |
| admin_user = None | (String) Service username. |
| auth_admin_prefix = | (String) Prefix to prepend at the beginning of the path. Deprecated, use identity_uri. |
| auth_host = 127.0.0.1 | (String) Host providing the admin Identity API endpoint. Deprecated, use identity_uri. |
| auth_port = 35357 | (Integer) Port of the admin Identity API endpoint. Deprecated, use identity_uri. |
| auth_protocol = https | (String) Protocol of the admin Identity API endpoint. Deprecated, use identity_uri. |
| auth_section = None | (Unknown) Config section from which to load plugin-specific options. |
| auth_type = None | (Unknown) Authentication type to load. |
| auth_uri = None | (String) Complete “public” Identity API endpoint. This endpoint should not be an “admin” endpoint, as it should be accessible by all end users. Unauthenticated clients are redirected to this endpoint to authenticate. Although this endpoint should ideally be unversioned, client support in the wild varies. If you’re using a versioned v2 endpoint here, then this should not be the same endpoint the service user utilizes for validating tokens, because normal end users may not be able to reach that endpoint. |
| auth_version = None | (String) API version of the admin Identity API endpoint. |
| cache = None | (String) Request environment key where the Swift cache object is stored. When auth_token middleware is deployed with a Swift cache, use this option to have the middleware share a caching backend with swift. Otherwise, use the memcached_servers option instead. |
| cafile = None | (String) A PEM encoded Certificate Authority to use when verifying HTTPS connections. Defaults to system CAs. |
| certfile = None | (String) Required if identity server requires client certificate. |
| check_revocations_for_cached = False | (Boolean) If true, the revocation list will be checked for cached tokens. This requires that PKI tokens are configured on the identity server. |
| delay_auth_decision = False | (Boolean) Do not handle authorization requests within the middleware, but delegate the authorization decision to downstream WSGI components. |
| enforce_token_bind = permissive | (String) Used to control the use and type of token binding. Can be set to: “disabled” to not check token binding; “permissive” (default) to validate binding information if the bind type is of a form known to the server and ignore it if not; “strict”, like “permissive” but the token is rejected if the bind type is unknown; “required”, where some form of token binding is needed for the token to be allowed; or the name of a binding method that must be present in tokens. |
| hash_algorithms = md5 | (List) Hash algorithms to use for hashing PKI tokens. This may be a single algorithm or multiple. The algorithms are those supported by Python standard hashlib.new(). The hashes will be tried in the order given, so put the preferred one first for performance. The result of the first hash will be stored in the cache. This will typically be set to multiple values only while migrating from a less secure algorithm to a more secure one. Once all the old tokens are expired this option should be set to a single value for better performance. |
| http_connect_timeout = None | (Integer) Request timeout value for communicating with Identity API server. |
| http_request_max_retries = 3 | (Integer) Number of times to try to reconnect when communicating with the Identity API server. |
| identity_uri = None | (String) Complete admin Identity API endpoint. This should specify the unversioned root endpoint, for example https://localhost:35357/ |
| include_service_catalog = True | (Boolean) (Optional) Indicate whether to set the X-Service-Catalog header. If False, middleware will not ask for service catalog on token validation and will not set the X-Service-Catalog header. |
| insecure = False | (Boolean) Verify HTTPS connections. |
| keyfile = None | (String) Required if identity server requires client certificate. |
| memcache_pool_conn_get_timeout = 10 | (Integer) (Optional) Number of seconds that an operation will wait to get a memcached client connection from the pool. |
| memcache_pool_dead_retry = 300 | (Integer) (Optional) Number of seconds memcached server is considered dead before it is tried again. |
| memcache_pool_maxsize = 10 | (Integer) (Optional) Maximum total number of open connections to every memcached server. |
| memcache_pool_socket_timeout = 3 | (Integer) (Optional) Socket timeout in seconds for communicating with a memcached server. |
| memcache_pool_unused_timeout = 60 | (Integer) (Optional) Number of seconds a connection to memcached is held unused in the pool before it is closed. |
| memcache_secret_key = None | (String) (Optional, mandatory if memcache_security_strategy is defined) This string is used for key derivation. |
| memcache_security_strategy = None | (String) (Optional) If defined, indicate whether token data should be authenticated or authenticated and encrypted. If MAC, token data is authenticated (with HMAC) in the cache. If ENCRYPT, token data is encrypted and authenticated in the cache. If the value is not one of these options or empty, auth_token will raise an exception on initialization. |
| memcache_use_advanced_pool = False | (Boolean) (Optional) Use the advanced (eventlet safe) memcached client pool. The advanced pool will only work under python 2.x. |
| memcached_servers = None | (List) Optionally specify a list of memcached server(s) to use for caching. If left undefined, tokens will instead be cached in-process. |
| region_name = None | (String) The region in which the identity server can be found. |
| revocation_cache_time = 10 | (Integer) Determines the frequency at which the list of revoked tokens is retrieved from the Identity service (in seconds). A high number of revocation events combined with a low cache duration may significantly reduce performance. Only valid for PKI tokens. |
| signing_dir = None | (String) Directory used to cache files related to PKI tokens. |
| token_cache_time = 300 | (Integer) In order to prevent excessive effort spent validating tokens, the middleware caches previously-seen tokens for a configurable duration (in seconds). Set to -1 to disable caching completely. |

Cache configurations

The cache configuration options allow the deployer to control how an application uses the oslo.cache library.

These options are supported by:

- Compute service

- Identity service

- Message service

- Networking service

- Orchestration service

For a complete list of all available cache configuration options, see oslo.cache configuration options.

Database configurations

You can configure OpenStack services to use any SQLAlchemy-compatible database.

To ensure that the database schema is current, run the following command:

# SERVICE-manage db sync

To configure the connection string for the database, use the configuration option settings documented in the table Description of database configuration options.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| db_driver = SERVICE.db | (String) DEPRECATED: The driver to use for database access |
| [database] | |
| backend = sqlalchemy | (String) The back end to use for the database. |
| connection = None | (String) The SQLAlchemy connection string to use to connect to the database. |
| connection_debug = 0 | (Integer) Verbosity of SQL debugging information: 0=None, 100=Everything. |
| connection_trace = False | (Boolean) Add Python stack traces to SQL as comment strings. |
| db_inc_retry_interval = True | (Boolean) If True, increases the interval between retries of a database operation up to db_max_retry_interval. |
| db_max_retries = 20 | (Integer) Maximum retries in case of connection error or deadlock error before error is raised. Set to -1 to specify an infinite retry count. |
| db_max_retry_interval = 10 | (Integer) If db_inc_retry_interval is set, the maximum seconds between retries of a database operation. |
| db_retry_interval = 1 | (Integer) Seconds between retries of a database transaction. |
| idle_timeout = 3600 | (Integer) Timeout before idle SQL connections are reaped. |
| max_overflow = 50 | (Integer) If set, use this value for max_overflow with SQLAlchemy. |
| max_pool_size = None | (Integer) Maximum number of SQL connections to keep open in a pool. |
| max_retries = 10 | (Integer) Maximum number of database connection retries during startup. Set to -1 to specify an infinite retry count. |
| min_pool_size = 1 | (Integer) Minimum number of SQL connections to keep open in a pool. |
| mysql_sql_mode = TRADITIONAL | (String) The SQL mode to be used for MySQL sessions. This option, including the default, overrides any server-set SQL mode. To use whatever SQL mode is set by the server configuration, set this to no value. Example: mysql_sql_mode= |
| pool_timeout = None | (Integer) If set, use this value for pool_timeout with SQLAlchemy. |
| retry_interval = 10 | (Integer) Interval between retries of opening a SQL connection. |
| slave_connection = None | (String) The SQLAlchemy connection string to use to connect to the slave database. |
| sqlite_db = oslo.sqlite | (String) The file name to use with SQLite. |
| sqlite_synchronous = True | (Boolean) If True, SQLite uses synchronous mode. |
| use_db_reconnect = False | (Boolean) Enable the experimental use of database reconnect on connection lost. |
| use_tpool = False | (Boolean) Enable the experimental use of thread pooling for all DB API calls |
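The interplay of db_retry_interval, db_inc_retry_interval, and db_max_retry_interval is easier to see with numbers. The sketch below assumes that the interval doubles after each retry until it reaches the maximum; the table only states that the interval increases up to db_max_retry_interval, so the doubling rule and the function name are assumptions for illustration.

```python
def retry_intervals(retries, retry_interval=1, inc_retry_interval=True,
                    max_retry_interval=10):
    """Return the wait time in seconds before each retry.

    Assumption for this sketch: the interval doubles after every retry
    and is capped at max_retry_interval.
    """
    intervals = []
    current = retry_interval
    for _ in range(retries):
        intervals.append(current)
        if inc_retry_interval:
            current = min(current * 2, max_retry_interval)
    return intervals


# Defaults from the table: start at 1 second, cap at 10 seconds.
schedule = retry_intervals(6)
fixed = retry_intervals(3, inc_retry_interval=False)
print(schedule)
print(fixed)
```

With db_inc_retry_interval disabled, every retry waits the same db_retry_interval.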

Logging configurations

You can configure where the service logs events, the level of logging, and log formats.

To customize logging for the service, use the configuration option settings documented in the table Description of common logging configuration options.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| debug = False | (Boolean) If set to true, the logging level will be set to DEBUG instead of the default INFO level. |
| default_log_levels = amqp=WARN, amqplib=WARN, boto=WARN, qpid=WARN, sqlalchemy=WARN, suds=INFO, oslo.messaging=INFO, iso8601=WARN, requests.packages.urllib3.connectionpool=WARN, urllib3.connectionpool=WARN, websocket=WARN, requests.packages.urllib3.util.retry=WARN, urllib3.util.retry=WARN, keystonemiddleware=WARN, routes.middleware=WARN, stevedore=WARN, taskflow=WARN, keystoneauth=WARN, oslo.cache=INFO, dogpile.core.dogpile=INFO | (List) List of package logging levels in logger=LEVEL pairs. This option is ignored if log_config_append is set. |
| fatal_deprecations = False | (Boolean) Enables or disables fatal status of deprecations. |
| fatal_exception_format_errors = False | (Boolean) Make exception message format errors fatal |
| instance_format = "[instance: %(uuid)s] " | (String) The format for an instance that is passed with the log message. |
| instance_uuid_format = "[instance: %(uuid)s] " | (String) The format for an instance UUID that is passed with the log message. |
| log_config_append = None | (String) The name of a logging configuration file. This file is appended to any existing logging configuration files. For details about logging configuration files, see the Python logging module documentation. Note that when logging configuration files are used then all logging configuration is set in the configuration file and other logging configuration options are ignored (for example, logging_context_format_string). |
| log_date_format = %Y-%m-%d %H:%M:%S | (String) Defines the format string for %(asctime)s in log records. This option is ignored if log_config_append is set. |
| log_dir = None | (String) (Optional) The base directory used for relative log_file paths. This option is ignored if log_config_append is set. |
| log_file = None | (String) (Optional) Name of log file to send logging output to. If no default is set, logging will go to stderr as defined by use_stderr. This option is ignored if log_config_append is set. |
| logging_context_format_string = %(asctime)s.%(msecs)03d %(process)d %(levelname)s %(name)s [%(request_id)s %(user_identity)s] %(instance)s%(message)s | (String) Format string to use for log messages with context. |
| logging_debug_format_suffix = %(funcName)s %(pathname)s:%(lineno)d | (String) Additional data to append to log message when logging level for the message is DEBUG. |
| logging_default_format_string = %(asctime)s.%(msecs)03d %(process)d %(levelname)s %(name)s [-] %(instance)s%(message)s | (String) Format string to use for log messages when context is undefined. |
| logging_exception_prefix = %(asctime)s.%(msecs)03d %(process)d ERROR %(name)s %(instance)s | (String) Prefix each line of exception output with this format. |
| logging_user_identity_format = %(user)s %(tenant)s %(domain)s %(user_domain)s %(project_domain)s | (String) Defines the format string for %(user_identity)s that is used in logging_context_format_string. |
| publish_errors = False | (Boolean) Enables or disables publication of error events. |
| syslog_log_facility = LOG_USER | (String) Syslog facility to receive log lines. This option is ignored if log_config_append is set. |
| use_stderr = True | (Boolean) Log output to standard error. This option is ignored if log_config_append is set. |
| use_syslog = False | (Boolean) Use syslog for logging. Existing syslog format is DEPRECATED and will be changed later to honor RFC5424. This option is ignored if log_config_append is set. |
| verbose = True | (Boolean) DEPRECATED: If set to false, the logging level will be set to WARNING instead of the default INFO level. |
| watch_log_file = False | (Boolean) Uses logging handler designed to watch file system. When log file is moved or removed this handler will open a new log file with specified path instantaneously. It makes sense only if log_file option is specified and Linux platform is used. This option is ignored if log_config_append is set. |
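The format strings in this table use the placeholder syntax of Python's standard logging module, so their effect can be previewed with a plain logging.Formatter. The sketch below applies the default values of logging_default_format_string, log_date_format, and instance_format from the table; the build_logger helper, the logger name, and the instance UUID are hypothetical, and the instance field is supplied by hand through extra because a plain logger does not know about it.

```python
import io
import logging

# Default values copied from the table above.
DEFAULT_FORMAT = ("%(asctime)s.%(msecs)03d %(process)d %(levelname)s "
                  "%(name)s [-] %(instance)s%(message)s")
DATE_FORMAT = "%Y-%m-%d %H:%M:%S"
INSTANCE_FORMAT = "[instance: %(uuid)s] "


def build_logger(name, stream, debug=False):
    """Create a logger that writes to stream; helper for this example."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(DEFAULT_FORMAT, DATE_FORMAT))
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.propagate = False
    # Mirrors the debug option: DEBUG when enabled, INFO otherwise.
    logger.setLevel(logging.DEBUG if debug else logging.INFO)
    return logger


stream = io.StringIO()
logger = build_logger("demo", stream)
instance = INSTANCE_FORMAT % {"uuid": "abc123"}
logger.info("started", extra={"instance": instance})
logger.debug("not shown at INFO level", extra={"instance": ""})
output = stream.getvalue()
print(output)
```

Passing debug=True to the helper would let the second message through, which corresponds to setting debug = True in the configuration file.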

Policy configurations

The policy configuration options allow the deployer to control where the policy files are located and the default rule to apply when a requested rule is not found.

| Configuration option = Default value | Description |
|---|---|
| [oslo_policy] | |
| policy_default_rule = default | (String) Default rule. Enforced when a requested rule is not found. |
| policy_dirs = ['policy.d'] | (Multi-valued) Directories where policy configuration files are stored. They can be relative to any directory in the search path defined by the config_dir option, or absolute paths. The file defined by policy_file must exist for these directories to be searched. Missing or empty directories are ignored. |
| policy_file = policy.json | (String) The JSON file that defines policies. |
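The fallback behavior of policy_default_rule can be pictured as a dictionary lookup with a default. The sketch below is a simplification for illustration: the rule names and rule strings in the JSON are invented, the rule_for helper is hypothetical, and the real policy engine evaluates the rules rather than just returning them.

```python
import json

# Hypothetical policy file content; names and rules are examples only.
POLICY_JSON = """
{
    "default": "role:admin",
    "compute:start": "role:member"
}
"""


def rule_for(policies, name, policy_default_rule="default"):
    """Return the rule for name, or the default rule if it is missing."""
    return policies.get(name, policies[policy_default_rule])


policies = json.loads(POLICY_JSON)
known = rule_for(policies, "compute:start")
missing = rule_for(policies, "compute:stop")
print(known)
print(missing)
```

A request for a rule that the file does not define is therefore checked against the rule named by policy_default_rule.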

RPC messaging configurations

OpenStack services use Advanced Message Queuing Protocol (AMQP), an open standard for messaging middleware. This messaging middleware enables the OpenStack services that run on multiple servers to talk to each other. OpenStack Oslo RPC supports two implementations of AMQP: RabbitMQ and ZeroMQ.

Configure messaging

Use these options to configure the RPC messaging driver.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| control_exchange = openstack | (String) The default exchange under which topics are scoped. May be overridden by an exchange name specified in the transport_url option. |
| default_publisher_id = None | (String) Default publisher_id for outgoing notifications |
| transport_url = None | (String) A URL representing the messaging driver to use and its full configuration. If not set, we fall back to the rpc_backend option and driver specific configuration. |

| Configuration option = Default value | Description |

|---|---|

| [DEFAULT] | |

notification_format = both |

(String) Specifies which notification format shall be used by nova. |

rpc_backend = rabbit |

(String) The messaging driver to use, defaults to rabbit. Other drivers include amqp and zmq. |

rpc_cast_timeout = -1 |

(Integer) Seconds to wait before a cast expires (TTL). The default value of -1 specifies an infinite linger period. The value of 0 specifies no linger period. Pending messages shall be discarded immediately when the socket is closed. Only supported by impl_zmq. |

rpc_conn_pool_size = 30 |

(Integer) Size of RPC connection pool. |

rpc_poll_timeout = 1 |

(Integer) The default number of seconds that poll should wait. Poll raises timeout exception when timeout expired. |

rpc_response_timeout = 60 |

(Integer) Seconds to wait for a response from a call. |

| [cells] | |

rpc_driver_queue_base = cells.intercell |

(String) RPC driver queue base When sending a message to another cell by JSON-ifying the message and making an RPC cast to ‘process_message’, a base queue is used. This option defines the base queue name to be used when communicating between cells. Various topics by message type will be appended to this. Possible values: * The base queue name to be used when communicating between cells. Services which consume this: * nova-cells Related options: * None |

| [oslo_concurrency] | |

disable_process_locking = False |

(Boolean) Enables or disables inter-process locks. |

lock_path = None |

(String) Directory to use for lock files. For security, the specified directory should only be writable by the user running the processes that need locking. Defaults to environment variable OSLO_LOCK_PATH. If external locks are used, a lock path must be set. |

| [oslo_messaging] | |

event_stream_topic = neutron_lbaas_event |

(String) topic name for receiving events from a queue |

| [oslo_messaging_amqp] | |

allow_insecure_clients = False |

(Boolean) Accept clients using either SSL or plain TCP |

broadcast_prefix = broadcast |

(String) address prefix used when broadcasting to all servers |

container_name = None |

(String) Name for the AMQP container |

group_request_prefix = unicast |

(String) address prefix when sending to any server in group |

idle_timeout = 0 |

(Integer) Timeout for inactive connections (in seconds) |

password = |

(String) Password for message broker authentication |

sasl_config_dir = |

(String) Path to directory that contains the SASL configuration |

sasl_config_name = |

(String) Name of configuration file (without .conf suffix) |

sasl_mechanisms = |

(String) Space separated list of acceptable SASL mechanisms |

server_request_prefix = exclusive |

(String) address prefix used when sending to a specific server |

ssl_ca_file = |

(String) CA certificate PEM file to verify server certificate |

ssl_cert_file = |

(String) Identifying certificate PEM file to present to clients |

ssl_key_file = |

(String) Private key PEM file used to sign cert_file certificate |

ssl_key_password = None |

(String) Password for decrypting ssl_key_file (if encrypted) |

trace = False |

(Boolean) Debug: dump AMQP frames to stdout |

username = |

(String) User name for message broker authentication |

| [oslo_messaging_notifications] | |

driver = [] |

(Multi-valued) The Drivers(s) to handle sending notifications. Possible values are messaging, messagingv2, routing, log, test, noop |

topics = notifications |

(List) AMQP topic used for OpenStack notifications. |

transport_url = None |

(String) A URL representing the messaging driver to use for notifications. If not set, we fall back to the same configuration used for RPC. |

| [upgrade_levels] | |

baseapi = None |

(String) Set a version cap for messages sent to the base api in any service |
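As a minimal sketch of how the notification options above might be combined in a service configuration file, the fragment below enables the messagingv2 driver. The broker host name, user name, and the RABBIT_PASS placeholder are hypothetical values chosen for illustration.

```ini
[oslo_messaging_notifications]
# One of the documented driver values.
driver = messagingv2
topics = notifications
# Optional. Hypothetical broker URL; if unset, the RPC transport configuration is used.
transport_url = rabbit://openstack:RABBIT_PASS@rabbit1.example.com:5672/
```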

Configure RabbitMQ

OpenStack Oslo RPC uses RabbitMQ by default.

The rpc_backend option is not required as long as RabbitMQ is the default messaging system. However, if it is included in the configuration, you must set it to rabbit:

rpc_backend = rabbit

You can configure messaging communication for different installation scenarios, tune retries for RabbitMQ, and define the size of the RPC thread pool. To monitor notifications through RabbitMQ, you must set the notification_driver option to nova.openstack.common.notifier.rpc_notifier. The default value for sending usage data is sixty seconds plus a random number of seconds from zero to sixty.

Use the options described in the table below to configure the RabbitMQ message system.

| Configuration option = Default value | Description |
|---|---|
| [oslo_messaging_rabbit] | |
| amqp_auto_delete = False | (Boolean) Auto-delete queues in AMQP. |
| amqp_durable_queues = False | (Boolean) Use durable queues in AMQP. |
| channel_max = None | (Integer) Maximum number of channels to allow |
| default_notification_exchange = ${control_exchange}_notification | (String) Exchange name for sending notifications |
| default_notification_retry_attempts = -1 | (Integer) Reconnecting retry count in case of connectivity problem during sending notification, -1 means infinite retry. |
| default_rpc_exchange = ${control_exchange}_rpc | (String) Exchange name for sending RPC messages |
| default_rpc_retry_attempts = -1 | (Integer) Reconnecting retry count in case of connectivity problem during sending RPC message, -1 means infinite retry. If the actual number of retry attempts is not 0, the RPC request could be processed more than once. |
| fake_rabbit = False | (Boolean) Deprecated, use rpc_backend=kombu+memory or rpc_backend=fake |
| frame_max = None | (Integer) The maximum byte size for an AMQP frame |
| heartbeat_interval = 1 | (Integer) How often to send heartbeats for consumer’s connections |
| heartbeat_rate = 2 | (Integer) How many times during the heartbeat_timeout_threshold we check the heartbeat. |
| heartbeat_timeout_threshold = 60 | (Integer) Number of seconds after which the Rabbit broker is considered down if heartbeat’s keep-alive fails (0 disables the heartbeat). EXPERIMENTAL |
| host_connection_reconnect_delay = 0.25 | (Floating point) Set delay for reconnection to some host which has connection error |
| kombu_compression = None | (String) EXPERIMENTAL: Possible values are: gzip, bz2. If not set, compression will not be used. This option may not be available in future versions. |
| kombu_failover_strategy = round-robin | (String) Determines how the next RabbitMQ node is chosen in case the one we are currently connected to becomes unavailable. Takes effect only if more than one RabbitMQ node is provided in config. |
| kombu_missing_consumer_retry_timeout = 60 | (Integer) How long to wait for a missing client before abandoning the attempt to send it its replies. This value should not be longer than rpc_response_timeout. |
| kombu_reconnect_delay = 1.0 | (Floating point) How long to wait before reconnecting in response to an AMQP consumer cancel notification. |
| kombu_ssl_ca_certs = | (String) SSL certification authority file (valid only if SSL enabled). |
| kombu_ssl_certfile = | (String) SSL cert file (valid only if SSL enabled). |
| kombu_ssl_keyfile = | (String) SSL key file (valid only if SSL enabled). |
| kombu_ssl_version = | (String) SSL version to use (valid only if SSL enabled). Valid values are TLSv1 and SSLv23. SSLv2, SSLv3, TLSv1_1, and TLSv1_2 may be available on some distributions. |
| notification_listener_prefetch_count = 100 | (Integer) Maximum number of unacknowledged messages that RabbitMQ can send to the notification listener. |
| notification_persistence = False | (Boolean) Persist notification messages. |
| notification_retry_delay = 0.25 | (Floating point) Reconnecting retry delay in case of connectivity problem during sending notification message |
| pool_max_overflow = 0 | (Integer) Maximum number of connections to create above pool_max_size. |
| pool_max_size = 10 | (Integer) Maximum number of connections to keep queued. |
| pool_recycle = 600 | (Integer) Lifetime of a connection (since creation) in seconds or None for no recycling. Expired connections are closed on acquire. |
| pool_stale = 60 | (Integer) Threshold at which inactive (since release) connections are considered stale in seconds or None for no staleness. Stale connections are closed on acquire. |
| pool_timeout = 30 | (Integer) Default number of seconds to wait for a connection to become available |
| rabbit_ha_queues = False | (Boolean) Try to use HA queues in RabbitMQ (x-ha-policy: all). If you change this option, you must wipe the RabbitMQ database. In RabbitMQ 3.0, queue mirroring is no longer controlled by the x-ha-policy argument when declaring a queue. If you just want to make sure that all queues (except those with auto-generated names) are mirrored across all nodes, run: rabbitmqctl set_policy HA '^(?!amq.).*' '{"ha-mode": "all"}' |
| rabbit_host = localhost | (String) The RabbitMQ broker address where a single node is used. |
| rabbit_hosts = $rabbit_host:$rabbit_port | (List) RabbitMQ HA cluster host:port pairs. |
| rabbit_interval_max = 30 | (Integer) Maximum interval of RabbitMQ connection retries. Default is 30 seconds. |
| rabbit_login_method = AMQPLAIN | (String) The RabbitMQ login method. |
| rabbit_max_retries = 0 | (Integer) Maximum number of RabbitMQ connection retries. Default is 0 (infinite retry count). |
| rabbit_password = guest | (String) The RabbitMQ password. |
| rabbit_port = 5672 | (Port number) The RabbitMQ broker port where a single node is used. |
| rabbit_qos_prefetch_count = 0 | (Integer) Specifies the number of messages to prefetch. Setting to zero allows unlimited messages. |
| rabbit_retry_backoff = 2 | (Integer) How long to back off between retries when connecting to RabbitMQ. |
| rabbit_retry_interval = 1 | (Integer) How frequently to retry connecting with RabbitMQ. |
| rabbit_transient_queues_ttl = 1800 | (Integer) Positive integer representing duration in seconds for queue TTL (x-expires). Queues which are unused for the duration of the TTL are automatically deleted. The parameter affects only reply and fanout queues. |
| rabbit_use_ssl = False | (Boolean) Connect over SSL for RabbitMQ. |
| rabbit_userid = guest | (String) The RabbitMQ userid. |
| rabbit_virtual_host = / | (String) The RabbitMQ virtual host. |
| rpc_listener_prefetch_count = 100 | (Integer) Maximum number of unacknowledged messages that RabbitMQ can send to the RPC listener. |
| rpc_queue_expiration = 60 | (Integer) Time to live for rpc queues without consumers in seconds. |
| rpc_reply_exchange = ${control_exchange}_rpc_reply | (String) Exchange name for receiving RPC replies |
| rpc_reply_listener_prefetch_count = 100 | (Integer) Maximum number of unacknowledged messages that RabbitMQ can send to the RPC reply listener. |
| rpc_reply_retry_attempts = -1 | (Integer) Reconnecting retry count in case of connectivity problem during sending reply. -1 means infinite retry during rpc_timeout |
| rpc_reply_retry_delay = 0.25 | (Floating point) Reconnecting retry delay in case of connectivity problem during sending reply. |
| rpc_retry_delay = 0.25 | (Floating point) Reconnecting retry delay in case of connectivity problem during sending RPC message |
| socket_timeout = 0.25 | (Floating point) Set socket timeout in seconds for connection’s socket |
| ssl = None | (Boolean) Enable SSL |
| ssl_options = None | (Dict) Arguments passed to ssl.wrap_socket |
| tcp_user_timeout = 0.25 | (Floating point) Set TCP_USER_TIMEOUT in seconds for connection’s socket |
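The following fragment is a minimal sketch of a clustered RabbitMQ setup built only from options in the table above. The broker host names, the openstack user, and the RABBIT_PASS placeholder are assumptions made for illustration, not recommended values.

```ini
[DEFAULT]
rpc_backend = rabbit

[oslo_messaging_rabbit]
# Hypothetical two-node cluster; replace with your own host:port pairs.
rabbit_hosts = rabbit1.example.com:5672,rabbit2.example.com:5672
rabbit_userid = openstack
rabbit_password = RABBIT_PASS
rabbit_virtual_host = /
# 0 keeps retrying forever; the interval is capped at rabbit_interval_max seconds.
rabbit_max_retries = 0
rabbit_retry_interval = 1
rabbit_interval_max = 30
# Only takes effect when more than one node is listed in rabbit_hosts.
kombu_failover_strategy = round-robin
```

Replacing the default guest credentials, as sketched here, is a common hardening step, but the account must already exist on the broker.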

Configure ZeroMQ

Use these options to configure the ZeroMQ messaging system for OpenStack Oslo RPC. ZeroMQ is not the default messaging system, so you must enable it by setting the rpc_backend option.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| rpc_zmq_bind_address = * | (String) ZeroMQ bind address. Should be a wildcard (*), an ethernet interface, or IP. The “host” option should point or resolve to this address. |
| rpc_zmq_bind_port_retries = 100 | (Integer) Number of retries to find a free port number before failing with ZMQBindError. |
| rpc_zmq_concurrency = eventlet | (String) Type of concurrency used. Either “native” or “eventlet” |
| rpc_zmq_contexts = 1 | (Integer) Number of ZeroMQ contexts, defaults to 1. |
| rpc_zmq_host = localhost | (String) Name of this node. Must be a valid hostname, FQDN, or IP address. Must match “host” option, if running Nova. |
| rpc_zmq_ipc_dir = /var/run/openstack | (String) Directory for holding IPC sockets. |
| rpc_zmq_matchmaker = redis | (String) MatchMaker driver. |
| rpc_zmq_max_port = 65536 | (Integer) Maximal port number for random ports range. |
| rpc_zmq_min_port = 49152 | (Port number) Minimal port number for random ports range. |
| rpc_zmq_topic_backlog = None | (Integer) Maximum number of ingress messages to locally buffer per topic. Default is unlimited. |
| use_pub_sub = True | (Boolean) Use PUB/SUB pattern for fanout methods. PUB/SUB always uses proxy. |
| zmq_target_expire = 120 | (Integer) Expiration timeout in seconds of a name service record about existing target ( < 0 means no timeout). |
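A minimal sketch of enabling ZeroMQ with the options above might look like the fragment below. The value zmq for rpc_backend and the node name controller1 are assumptions for illustration; verify the backend name against your oslo.messaging release.

```ini
[DEFAULT]
# Assumed driver name for the ZeroMQ backend.
rpc_backend = zmq
# Hypothetical node name; it must be a valid hostname, FQDN, or IP address.
rpc_zmq_host = controller1
rpc_zmq_bind_address = *
rpc_zmq_matchmaker = redis
rpc_zmq_ipc_dir = /var/run/openstack
```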

Cross-origin resource sharing

Cross-Origin Resource Sharing (CORS) is a mechanism that allows code running in a browser (JavaScript, for example) to make requests to a domain other than the one from which it originated. OpenStack services support CORS requests.

For more information, see cross-project features in OpenStack Administrator Guide, CORS in Dashboard, and CORS in Object Storage service.

For a complete list of all available CORS configuration options, see CORS configuration options.
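As a hedged illustration, a service that uses the oslo.middleware CORS filter could be configured along the following lines. The option names are taken from the oslo.middleware [cors] group rather than from this section, and the dashboard origin is a hypothetical value.

```ini
[cors]
# Hypothetical browser origin that is allowed to call this service's API.
allowed_origin = https://dashboard.example.com
allow_credentials = true
allow_methods = GET,PUT,POST,DELETE
allow_headers = Content-Type,X-Auth-Token
# Seconds a browser may cache the preflight response.
max_age = 3600
```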

Application Catalog service

Application Catalog API configuration

Configuration options

The Application Catalog service can be configured by changing the following options:

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| admin_role = admin | (String) Role used to identify an authenticated user as administrator. |
| max_header_line = 16384 | (Integer) Maximum line size of message headers to be accepted. max_header_line may need to be increased when using large tokens (typically those generated by the Keystone v3 API with big service catalogs). |
| secure_proxy_ssl_header = X-Forwarded-Proto | (String) The HTTP Header that will be used to determine what the original request protocol scheme was, even if it was removed by an SSL terminator proxy. |
| [oslo_middleware] | |
| enable_proxy_headers_parsing = False | (Boolean) Whether the application is behind a proxy or not. This determines if the middleware should parse the headers or not. |
| max_request_body_size = 114688 | (Integer) The maximum body size for each request, in bytes. |
| secure_proxy_ssl_header = X-Forwarded-Proto | (String) DEPRECATED: The HTTP Header that will be used to determine what the original request protocol scheme was, even if it was hidden by an SSL termination proxy. |
| [oslo_policy] | |
| policy_default_rule = default | (String) Default rule. Enforced when a requested rule is not found. |
| policy_dirs = ['policy.d'] | (Multi-valued) Directories where policy configuration files are stored. They can be relative to any directory in the search path defined by the config_dir option, or absolute paths. The file defined by policy_file must exist for these directories to be searched. Missing or empty directories are ignored. |
| policy_file = policy.json | (String) The JSON file that defines policies. |
| [paste_deploy] | |
| config_file = None | (String) Path to Paste config file |
| flavor = None | (String) Paste flavor |

| Configuration option = Default value | Description |
|---|---|
| [cfapi] | |
| auth_url = localhost:5000 | (String) Authentication URL |
| bind_host = localhost | (String) Host for service broker |
| bind_port = 8083 | (String) Port for service broker |
| packages_service = murano | (String) Package service which should be used by service broker |
| project_domain_name = default | (String) Domain name of the project |
| tenant = admin | (String) Project for service broker |
| user_domain_name = default | (String) Domain name of the user |
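For orientation, the [cfapi] options above could be written out in the service broker's configuration file as in the sketch below. All values shown are simply the documented defaults; substitute the Identity endpoint and project used in your deployment.

```ini
[cfapi]
bind_host = localhost
bind_port = 8083
# Documented default; point this at your Identity service.
auth_url = localhost:5000
tenant = admin
user_domain_name = default
project_domain_name = default
packages_service = murano
```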

Additional configuration options for Application Catalog service

These options can also be set in the murano.conf file.

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| backlog = 4096 | (Integer) Number of backlog requests to configure the socket with |
| bind_host = 0.0.0.0 | (String) Address to bind the Murano API server to. |
| bind_port = 8082 | (Port number) Port to bind the Murano API server to. |
| executor_thread_pool_size = 64 | (Integer) Size of executor thread pool. |
| file_server = | (String) Set a file server. |
| home_region = None | (String) Default region name used to get services endpoints. |
| metadata_dir = ./meta | (String) Metadata dir |
| publish_errors = False | (Boolean) Enables or disables publication of error events. |
| tcp_keepidle = 600 | (Integer) Sets the value of TCP_KEEPIDLE in seconds for each server socket. Not supported on OS X. |
| use_router_proxy = True | (Boolean) Use ROUTER remote proxy. |
| [murano] | |
| api_limit_max = 100 | (Integer) Maximum number of packages to be returned in a single pagination request |
| api_workers = None | (Integer) Number of API workers |
| cacert = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Murano API used by Murano engine. |
| cert_file = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Murano used by Murano engine. |
| enabled_plugins = None | (List) List of enabled Extension Plugins. Remove or leave commented to enable all installed plugins. |
| endpoint_type = publicURL | (String) Murano endpoint type used by Murano engine. |
| insecure = False | (Boolean) This option explicitly allows Murano to perform “insecure” SSL connections and transfers used by Murano engine. |
| key_file = None | (String) (SSL/SSH) Private key file name to communicate with Murano API used by Murano engine. |
| limit_param_default = 20 | (Integer) Default value for package pagination in API. |
| package_size_limit = 5 | (Integer) Maximum application package size, Mb |
| url = None | (String) Optional murano url in format like http://0.0.0.0:8082 used by Murano engine |
| [stats] | |
| period = 5 | (Integer) Statistics collection interval in minutes. Default value is 5 minutes. |
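A minimal sketch of a murano.conf fragment that combines the API listener and the [murano] client options might look as follows. The murano.example.com host name and the worker count are hypothetical; the remaining values are the documented defaults.

```ini
[DEFAULT]
bind_host = 0.0.0.0
bind_port = 8082

[murano]
# Hypothetical API URL, following the format shown in the url description.
url = http://murano.example.com:8082
endpoint_type = publicURL
# Hypothetical worker count.
api_workers = 2
limit_param_default = 20
api_limit_max = 100

[stats]
period = 5
```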

| Configuration option = Default value | Description |
|---|---|
| [engine] | |
| agent_timeout = 3600 | (Integer) Time for waiting for a response from murano agent during the deployment |
| class_configs = /etc/murano/class-configs | (String) Path to class configuration files |
| disable_murano_agent = False | (Boolean) Disallow the use of murano-agent |
| enable_model_policy_enforcer = False | (Boolean) Enable model policy enforcer using Congress |
| enable_packages_cache = True | (Boolean) Enables murano-engine to persist on disk packages downloaded during deployments. The packages would be re-used for subsequent deployments. |
| engine_workers = None | (Integer) Number of engine workers |
| load_packages_from = | (List) List of directories to load local packages from. If not provided, packages will be loaded only from the API |
| packages_cache = None | (String) Location (directory) for Murano package cache. |
| packages_service = murano | (String) The service to store murano packages: murano (stands for legacy behavior using murano-api) or glance (stands for glance-glare artifact service) |
| use_trusts = True | (Boolean) Create resources using trust token rather than user’s token |
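The engine options above might be combined as in the following sketch, which keeps the on-disk package cache enabled and adds a local package directory. Both directory paths and the worker count are hypothetical examples.

```ini
[engine]
# Hypothetical worker count.
engine_workers = 4
agent_timeout = 3600
enable_packages_cache = True
# Hypothetical directories; they must be readable by the murano-engine user.
packages_cache = /var/cache/murano/packages
load_packages_from = /opt/murano/local-packages
use_trusts = True
```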

| Configuration option = Default value | Description |
|---|---|
| [glare] | |
| ca_file = None | (String) (SSL) Tells Murano to use the specified certificate file to verify the peer running Glare API. |
| cert_file = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Glare. |
| endpoint_type = publicURL | (String) Glare endpoint type. |
| insecure = False | (Boolean) This option explicitly allows Murano to perform “insecure” SSL connections and transfers with Glare API. |
| key_file = None | (String) (SSL/SSH) Private key file name to communicate with Glare API. |
| url = None | (String) Optional glare url in format like http://0.0.0.0:9494 used by Glare API |
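If packages are stored in the Glare artifact service rather than through murano-api, the [engine] and [glare] groups would plausibly be set together, as in this sketch. The glare.example.com host name is a hypothetical value.

```ini
[engine]
# Store packages in the glance-glare artifact service instead of murano-api.
packages_service = glance

[glare]
# Hypothetical endpoint, following the format shown in the url description.
url = http://glare.example.com:9494
endpoint_type = publicURL
insecure = False
```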

| Configuration option = Default value | Description |
|---|---|
| [heat] | |
| ca_file = None | (String) (SSL) Tells Murano to use the specified certificate file to verify the peer running Heat API. |
| cert_file = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Heat. |
| endpoint_type = publicURL | (String) Heat endpoint type. |
| insecure = False | (Boolean) This option explicitly allows Murano to perform “insecure” SSL connections and transfers with Heat API. |
| key_file = None | (String) (SSL/SSH) Private key file name to communicate with Heat API. |
| stack_tags = murano | (List) List of tags to be assigned to heat stacks created during environment deployment. |
| url = None | (String) Optional heat endpoint override |
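As a small illustration of the [heat] group, the sketch below verifies the Heat API certificate against a CA bundle and tags created stacks with an extra label. The CA bundle path and the app-catalog tag are hypothetical.

```ini
[heat]
endpoint_type = publicURL
insecure = False
# Hypothetical CA bundle used to verify the Heat API certificate.
ca_file = /etc/ssl/certs/ca-bundle.crt
# stack_tags is a list; app-catalog is a hypothetical extra tag.
stack_tags = murano,app-catalog
```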

| Configuration option = Default value | Description |
|---|---|
| [mistral] | |
| ca_cert = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Mistral. |
| endpoint_type = publicURL | (String) Mistral endpoint type. |
| insecure = False | (Boolean) This option explicitly allows Murano to perform “insecure” SSL connections and transfers with Mistral. |
| service_type = workflowv2 | (String) Mistral service type. |
| url = None | (String) Optional mistral endpoint override |

| Configuration option = Default value | Description |
|---|---|
| [networking] | |
| create_router = True | (Boolean) This option will create a router when one with “router_name” does not exist |
| default_dns = | (List) List of default DNS nameservers to be assigned to created Networks |
| driver = None | (String) Network driver to use. Options are neutron or nova. If not provided, the driver will be detected. |
| env_ip_template = 10.0.0.0 | (String) Template IP address for generating environment subnet cidrs |
| external_network = ext-net | (String) ID or name of the external network for routers to connect to |
| max_environments = 250 | (Integer) Maximum number of environments that use a single router per tenant |
| max_hosts = 250 | (Integer) Maximum number of VMs per environment |
| network_config_file = netconfig.yaml | (String) If provided, networking configuration will be taken from this file |
| router_name = murano-default-router | (String) Name of the router that is going to be used in order to join all networks created by Murano |
| [neutron] | |
| ca_cert = None | (String) (SSL) Tells Murano to use the specified client certificate file when communicating with Neutron. |
| endpoint_type = publicURL | (String) Neutron endpoint type. |
| insecure = False | (Boolean) This option explicitly allows Murano to perform “insecure” SSL connections and transfers with Neutron API. |
| url = None | (String) Optional neutron endpoint override |
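The networking options above could be pinned explicitly instead of relying on driver detection, as in the following sketch. The DNS server addresses are hypothetical; the other values are the documented defaults or one of the documented driver choices.

```ini
[networking]
# Set the driver explicitly instead of relying on detection.
driver = neutron
external_network = ext-net
router_name = murano-default-router
# Create the router if no router named router_name exists.
create_router = True
# Hypothetical resolver addresses assigned to created networks.
default_dns = 192.0.2.53,192.0.2.54
env_ip_template = 10.0.0.0

[neutron]
endpoint_type = publicURL
insecure = False
```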

| Configuration option = Default value | Description |
|---|---|
| [matchmaker_redis] | |
| check_timeout = 20000 | (Integer) Time in ms to wait before the transaction is killed. |
| host = 127.0.0.1 | (String) DEPRECATED: Host to locate redis. Replaced by [DEFAULT]/transport_url |
| password = | (String) DEPRECATED: Password for Redis server (optional). Replaced by [DEFAULT]/transport_url |
| port = 6379 | (Port number) DEPRECATED: Use this port to connect to redis host. Replaced by [DEFAULT]/transport_url |
| sentinel_group_name = oslo-messaging-zeromq | (String) Redis replica set name. |
| sentinel_hosts = | (List) DEPRECATED: List of Redis Sentinel hosts (fault tolerance mode) e.g. [host:port, host1:port ... ] Replaced by [DEFAULT]/transport_url |
| socket_timeout = 10000 | (Integer) Timeout in ms on blocking socket operations |
| wait_timeout = 2000 | (Integer) Time in ms to wait between connection attempts. |

New, updated, and deprecated options in Newton for Application Catalog service

| Option = default value | (Type) Help string |
|---|---|
| [cfapi] packages_service = murano | (StrOpt) Package service which should be used by service broker |
| [engine] engine_workers = None | (IntOpt) Number of engine workers |
| [murano] api_workers = None | (IntOpt) Number of API workers |
| [networking] driver = None | (StrOpt) Network driver to use. Options are neutron or nova. If not provided, the driver will be detected. |

| Deprecated option | New Option |
|---|---|
| [DEFAULT] use_syslog | None |
| [engine] workers | [engine] engine_workers |
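When updating an existing murano.conf for Newton, the renamed option from the table above would be migrated roughly as sketched here. The worker count of 4 is a hypothetical value.

```ini
[engine]
# Deprecated name, shown commented out for reference:
# workers = 4
# Replacement name in Newton:
engine_workers = 4
```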

This chapter describes the Application Catalog service configuration options.

Note

The common configurations for shared services and libraries, such as database connections and RPC messaging, are described at Common configurations.

Bare Metal service

Bare Metal API configuration

Configuration options

The following options allow configuration of the APIs that Bare Metal service supports.

| Configuration option = Default value | Description |
|---|---|
| [api] | |
| api_workers = None | (Integer) Number of workers for OpenStack Ironic API service. The default is equal to the number of CPUs available if that can be determined, else a default worker count of 1 is returned. |
| enable_ssl_api = False | (Boolean) Enable the integrated stand-alone API to service requests via HTTPS instead of HTTP. If there is a front-end service performing HTTPS offloading from the service, this option should be False; note, you will want to change public API endpoint to represent SSL termination URL with ‘public_endpoint’ option. |
| host_ip = 0.0.0.0 | (String) The IP address on which ironic-api listens. |
| max_limit = 1000 | (Integer) The maximum number of items returned in a single response from a collection resource. |
| port = 6385 | (Port number) The TCP port on which ironic-api listens. |
| public_endpoint = None | (String) Public URL to use when building the links to the API resources (for example, “https://ironic.rocks:6384”). If None the links will be built using the request’s host URL. If the API is operating behind a proxy, you will want to change this to represent the proxy’s URL. Defaults to None. |
| ramdisk_heartbeat_timeout = 300 | (Integer) Maximum interval (in seconds) for agent heartbeats. |
| restrict_lookup = True | (Boolean) Whether to restrict the lookup API to only nodes in certain states. |
| [oslo_middleware] | |
| enable_proxy_headers_parsing = False | (Boolean) Whether the application is behind a proxy or not. This determines if the middleware should parse the headers or not. |
| max_request_body_size = 114688 | (Integer) The maximum body size for each request, in bytes. |
| secure_proxy_ssl_header = X-Forwarded-Proto | (String) DEPRECATED: The HTTP Header that will be used to determine what the original request protocol scheme was, even if it was hidden by an SSL termination proxy. |
| [oslo_versionedobjects] | |
| fatal_exception_format_errors = False | (Boolean) Make exception message format errors fatal |
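The descriptions of enable_ssl_api and public_endpoint suggest the following arrangement when a front-end proxy terminates HTTPS for ironic-api. This is a sketch only, and the baremetal.example.com URL is a hypothetical proxy address.

```ini
[api]
# HTTPS is terminated by the front-end proxy, so the built-in HTTPS stays off.
enable_ssl_api = False
host_ip = 0.0.0.0
port = 6385
# Hypothetical proxy URL, used when building links in API responses.
public_endpoint = https://baremetal.example.com:6385

[oslo_middleware]
# Let the middleware parse the proxy headers.
enable_proxy_headers_parsing = True
```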

Additional configuration options for Bare Metal service

The following tables provide a comprehensive list of the Bare Metal service configuration options.

| Configuration option = Default value | Description |
|---|---|
| [agent] | |
| agent_api_version = v1 | (String) API version to use for communicating with the ramdisk agent. |
| deploy_logs_collect = on_failure | (String) Whether Ironic should collect the deployment logs on deployment failure (on_failure), always or never. |
| deploy_logs_local_path = /var/log/ironic/deploy | (String) The path to the directory where the logs should be stored, used when the deploy_logs_storage_backend is configured to “local”. |
| deploy_logs_storage_backend = local | (String) The name of the storage backend where the logs will be stored. |
| deploy_logs_swift_container = ironic_deploy_logs_container | (String) The name of the Swift container to store the logs, used when the deploy_logs_storage_backend is configured to “swift”. |
| deploy_logs_swift_days_to_expire = 30 | (Integer) Number of days before a log object is marked as expired in Swift. If None, the logs will be kept forever or until manually deleted. Used when the deploy_logs_storage_backend is configured to “swift”. |
| manage_agent_boot = True | (Boolean) Whether Ironic will manage booting of the agent ramdisk. If set to False, you will need to configure your mechanism to allow booting the agent ramdisk. |
| memory_consumed_by_agent = 0 | (Integer) The memory size in MiB consumed by agent when it is booted on a bare metal node. This is used for checking if the image can be downloaded and deployed on the bare metal node after booting agent ramdisk. This may be set according to the memory consumed by the agent ramdisk image. |
| post_deploy_get_power_state_retries = 6 | (Integer) Number of times to retry getting power state to check if bare metal node has been powered off after a soft power off. |
| post_deploy_get_power_state_retry_interval = 5 | (Integer) Amount of time (in seconds) to wait between polling power state after triggering a soft power off. |
| stream_raw_images = True | (Boolean) Whether the agent ramdisk should stream raw images directly onto the disk or not. By streaming raw images directly onto the disk the agent ramdisk will not spend time copying the image to a tmpfs partition (therefore consuming less memory) prior to writing it to the disk. Unless the disk where the image will be copied to is really slow, this option should be set to True. Defaults to True. |
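As a sketch of the deploy log options above, the fragment below collects logs for every deployment and stores them in Swift instead of the local directory. The values always and swift come from the option descriptions; the container name and expiry are the documented defaults.

```ini
[agent]
# Collect logs for every deployment, not only failed ones.
deploy_logs_collect = always
deploy_logs_storage_backend = swift
deploy_logs_swift_container = ironic_deploy_logs_container
# Log objects are marked as expired in Swift after 30 days.
deploy_logs_swift_days_to_expire = 30
```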

| Configuration option = Default value | Description |
|---|---|
| [amt] | |
| action_wait = 10 | (Integer) Amount of time (in seconds) to wait, before retrying an AMT operation |
| awake_interval = 60 | (Integer) Time interval (in seconds) for successive awake call to AMT interface, this depends on the IdleTimeout setting on AMT interface. AMT Interface will go to sleep after 60 seconds of inactivity by default. IdleTimeout=0 means AMT will not go to sleep at all. Setting awake_interval=0 will disable awake call. |
| max_attempts = 3 | (Integer) Maximum number of times to attempt an AMT operation, before failing |
| protocol = http | (String) Protocol used for AMT endpoint |

| Configuration option = Default value | Description |
|---|---|
| [audit] | |
| audit_map_file = /etc/ironic/ironic_api_audit_map.conf | (String) Path to audit map file for ironic-api service. Used only when API audit is enabled. |
| enabled = False | (Boolean) Enable auditing of API requests (for ironic-api service). |
| ignore_req_list = None | (String) Comma separated list of Ironic REST API HTTP methods to be ignored during audit. For example: auditing will not be done on any GET or POST requests if this is set to “GET,POST”. It is used only when API audit is enabled. |
| namespace = openstack | (String) namespace prefix for generated id |
| [audit_middleware_notifications] | |
| driver = None | (String) The Driver to handle sending notifications. Possible values are messaging, messagingv2, routing, log, test, noop. If not specified, then value from oslo_messaging_notifications conf section is used. |
| topics = None | (List) List of AMQP topics used for OpenStack notifications. If not specified, then value from oslo_messaging_notifications conf section is used. |
| transport_url = None | (String) A URL representing messaging driver to use for notification. If not specified, we fall back to the same configuration used for RPC. |
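A minimal sketch of enabling API auditing with the options above follows. Skipping GET requests and sending audit notifications to the log driver are illustrative choices, not recommendations from the reference.

```ini
[audit]
enabled = True
audit_map_file = /etc/ironic/ironic_api_audit_map.conf
# Skip read-only requests; POST and other methods are still audited.
ignore_req_list = GET

[audit_middleware_notifications]
# One of the documented driver values.
driver = log
```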

| Configuration option = Default value | Description |
|---|---|
| [cimc] | |
| action_interval = 10 | (Integer) Amount of time in seconds to wait in between power operations |
| max_retry = 6 | (Integer) Number of times a power operation needs to be retried |
| [cisco_ucs] | |
| action_interval = 5 | (Integer) Amount of time in seconds to wait in between power operations |
| max_retry = 6 | (Integer) Number of times a power operation needs to be retried |

| Configuration option = Default value | Description |

|---|---|

| [DEFAULT] | |

bindir = /usr/local/bin |

(String) Directory where ironic binaries are installed. |

debug_tracebacks_in_api = False |

(Boolean) Return server tracebacks in the API response for any error responses. WARNING: this is insecure and should not be used in a production environment. |

default_network_interface = None |

(String) Default network interface to be used for nodes that do not have network_interface field set. A complete list of network interfaces present on your system may be found by enumerating the “ironic.hardware.interfaces.network” entrypoint. |

enabled_drivers = pxe_ipmitool |

(List) Specify the list of drivers to load during service initialization. Missing drivers, or drivers which fail to initialize, will prevent the conductor service from starting. The option default is a recommended set of production-oriented drivers. A complete list of drivers present on your system may be found by enumerating the “ironic.drivers” entrypoint. An example may be found in the developer documentation online. |

enabled_network_interfaces = flat, noop |

(List) Specify the list of network interfaces to load during service initialization. Missing network interfaces, or network interfaces which fail to initialize, will prevent the conductor service from starting. The option default is a recommended set of production-oriented network interfaces. A complete list of network interfaces present on your system may be found by enumerating the “ironic.hardware.interfaces.network” entrypoint. This value must be the same on all ironic-conductor and ironic-api services, because it is used by ironic-api service to validate a new or updated node’s network_interface value. |

| executor_thread_pool_size = 64 | (Integer) Size of executor thread pool. |
| fatal_exception_format_errors = False | (Boolean) Used if there is a formatting error when generating an exception message (a programming error). If True, raise an exception; if False, use the unformatted message. |
| force_raw_images = True | (Boolean) If True, convert backing images to “raw” disk image format. |
| grub_config_template = $pybasedir/common/grub_conf.template | (String) Template file for grub configuration file. |
| hash_distribution_replicas = 1 | (Integer) [Experimental Feature] Number of hosts to map onto each hash partition. Setting this to more than one will cause additional conductor services to prepare deployment environments and potentially allow the Ironic cluster to recover more quickly if a conductor instance is terminated. |
| hash_partition_exponent = 5 | (Integer) Exponent to determine the number of hash partitions to use when distributing load across conductors. Larger values will result in more even distribution of load and less load when rebalancing the ring, but more memory usage. The number of partitions per conductor is (2^hash_partition_exponent). This determines the granularity of rebalancing: given 10 hosts and an exponent of 2, there are 40 partitions in the ring. A few thousand partitions should make rebalancing smooth in most cases. The default is suitable for up to a few hundred conductors. Too many partitions have a CPU impact. |
| hash_ring_reset_interval = 180 | (Integer) Interval (in seconds) between hash ring resets. |
| host = localhost | (String) Name of this node. This can be an opaque identifier. It is not necessarily a hostname, FQDN, or IP address. However, the node name must be valid within an AMQP key and, if using ZeroMQ, it must be a valid hostname, FQDN, or IP address. |
| isolinux_bin = /usr/lib/syslinux/isolinux.bin | (String) Path to isolinux binary file. |
| isolinux_config_template = $pybasedir/common/isolinux_config.template | (String) Template file for isolinux configuration file. |
| my_ip = 127.0.0.1 | (String) IP address of this host. If unset, will determine the IP programmatically. If unable to do so, will use “127.0.0.1”. |
| notification_level = None | (String) Specifies the minimum level for which to send notifications. If not set, no notifications will be sent. The default is for this option to be unset. |
| parallel_image_downloads = False | (Boolean) Run image downloads and raw format conversions in parallel. |
| pybasedir = /usr/lib/python/site-packages/ironic/ironic | (String) Directory where the ironic python module is installed. |
| rootwrap_config = /etc/ironic/rootwrap.conf | (String) Path to the rootwrap configuration file to use for running commands as root. |
| state_path = $pybasedir | (String) Top-level directory for maintaining ironic’s state. |
| tempdir = /tmp | (String) Temporary working directory. Defaults to the Python temporary directory. |
| [ironic_lib] | |
| fatal_exception_format_errors = False | (Boolean) Make exception message format errors fatal. |
| root_helper = sudo ironic-rootwrap /etc/ironic/rootwrap.conf | (String) Command that is prefixed to commands that are run as root. If not specified, no commands are run as root. |
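
The partition arithmetic described for `hash_partition_exponent` can be checked with a short calculation. The following is a minimal sketch, not Ironic's hash ring code; the function name `partitions_in_ring` is hypothetical and only restates the rule that each conductor contributes 2^hash_partition_exponent partitions.

```python
def partitions_in_ring(num_conductors: int, hash_partition_exponent: int = 5) -> int:
    """Return the total number of partitions in the ring.

    Each conductor contributes 2**hash_partition_exponent partitions,
    as stated in the option description.
    """
    if num_conductors < 0 or hash_partition_exponent < 0:
        raise ValueError("both arguments must be non-negative")
    return num_conductors * 2 ** hash_partition_exponent


# The example from the option description: 10 hosts, exponent 2.
print(partitions_in_ring(10, 2))  # 40
# The same 10 hosts with the default exponent of 5.
print(partitions_in_ring(10))     # 320
```

With the default exponent, a ring reaches "a few thousand" partitions at roughly one hundred conductors (100 * 32 = 3200).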

| Configuration option = Default value | Description |
|---|---|
| [conductor] | |
| api_url = None | (String) URL of Ironic API service. If not set, ironic can get the current value from the keystone service catalog. |
| automated_clean = True | (Boolean) Enables or disables automated cleaning. Automated cleaning is a configurable set of steps, such as erasing disk drives, that are performed on the node to ensure it is in a baseline state and ready to be deployed to. This is done after instance deletion as well as during the transition from a “manageable” to “available” state. When enabled, the particular steps performed to clean a node depend on which driver that node is managed by; see the individual driver’s documentation for details. NOTE: The introduction of the cleaning operation causes instance deletion to take significantly longer. In an environment where all tenants are trusted (for example, because there is only one tenant), this option could be safely disabled. |
| check_provision_state_interval = 60 | (Integer) Interval between checks of provision timeouts, in seconds. |
| clean_callback_timeout = 1800 | (Integer) Timeout (seconds) to wait for a callback from the ramdisk doing the cleaning. If the timeout is reached, the node will be put in the “clean failed” provision state. Set to 0 to disable timeout. |
| configdrive_swift_container = ironic_configdrive_container | (String) Name of the Swift container to store config drive data. Used when configdrive_use_swift is True. |
| configdrive_use_swift = False | (Boolean) Whether to upload the config drive to Swift. |
| deploy_callback_timeout = 1800 | (Integer) Timeout (seconds) to wait for a callback from a deploy ramdisk. Set to 0 to disable timeout. |
| force_power_state_during_sync = True | (Boolean) During sync_power_state, whether to set the hardware power state to the state recorded in the database (True) or to update the database based on the hardware state (False). |
| heartbeat_interval = 10 | (Integer) Seconds between conductor heartbeats. |
| heartbeat_timeout = 60 | (Integer) Maximum time (in seconds) since the last check-in of a conductor. A conductor is considered inactive when this time has been exceeded. |
| inspect_timeout = 1800 | (Integer) Timeout (seconds) for waiting for node inspection. Set to 0 for no timeout. |
| node_locked_retry_attempts = 3 | (Integer) Number of attempts to grab a node lock. |
| node_locked_retry_interval = 1 | (Integer) Seconds to sleep between node lock attempts. |
| periodic_max_workers = 8 | (Integer) Maximum number of worker threads that can be started simultaneously by a periodic task. Should be less than RPC thread pool size. |
| power_state_sync_max_retries = 3 | (Integer) During sync_power_state failures, limit the number of times Ironic should try syncing the hardware node power state with the node power state in the database. |
| send_sensor_data = False | (Boolean) Enable sending sensor data messages via the notification bus. |
| send_sensor_data_interval = 600 | (Integer) Seconds between the conductor sending sensor data messages to Ceilometer via the notification bus. |
| send_sensor_data_types = ALL | (List) List of comma-separated meter types which need to be sent to Ceilometer. The default value, “ALL”, is a special value meaning send all the sensor data. |
| sync_local_state_interval = 180 | (Integer) When conductors join or leave the cluster, existing conductors may need to update any persistent local state as nodes are moved around the cluster. This option controls how often, in seconds, each conductor will check for nodes that it should “take over”. Set it to a negative value to disable the check entirely. |
| sync_power_state_interval = 60 | (Integer) Interval between syncing the node power state to the database, in seconds. |
| workers_pool_size = 100 | (Integer) The size of the workers greenthread pool. Note that 2 threads will be reserved by the conductor itself for handling heartbeats and periodic tasks. |
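
The timeout semantics described for `heartbeat_timeout` and for the callback timeouts (`clean_callback_timeout`, `deploy_callback_timeout`) can be illustrated as follows. This is a sketch under stated assumptions rather than the conductor's implementation: the helper names `conductor_is_active` and `callback_timed_out` are hypothetical, and elapsed times are passed in as plain numbers of seconds.

```python
def conductor_is_active(seconds_since_checkin: float,
                        heartbeat_timeout: int = 60) -> bool:
    """A conductor is inactive once heartbeat_timeout has been exceeded."""
    return seconds_since_checkin <= heartbeat_timeout


def callback_timed_out(seconds_waited: float,
                       callback_timeout: int = 1800) -> bool:
    """A timeout of 0 disables the check; otherwise it fires once reached."""
    if callback_timeout == 0:
        return False
    return seconds_waited >= callback_timeout


print(conductor_is_active(45))      # True: within the 60 second window
print(conductor_is_active(61))      # False: the timeout has been exceeded
print(callback_timed_out(7200, 0))  # False: 0 disables the timeout
print(callback_timed_out(1800))     # True: the timeout has been reached
```

With the defaults of `heartbeat_interval = 10` and `heartbeat_timeout = 60`, a conductor can miss several consecutive heartbeats before it is treated as inactive.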

| Configuration option = Default value | Description |
|---|---|
| [console] | |
| subprocess_checking_interval = 1 | (Integer) Time interval (in seconds) for checking the status of console subprocess. |
| subprocess_timeout = 10 | (Integer) Time (in seconds) to wait for the console subprocess to start. |
| terminal = shellinaboxd | (String) Path to serial console terminal program. Used only by Shell In A Box console. |
| terminal_cert_dir = None | (String) Directory containing the terminal SSL cert (PEM) for serial console access. Used only by Shell In A Box console. |
| terminal_pid_dir = None | (String) Directory for holding terminal pid files. If not specified, the temporary directory will be used. |

| Configuration option = Default value | Description |
|---|---|
| [drac] | |
| query_raid_config_job_status_interval = 120 | (Integer) Interval (in seconds) between periodic RAID job status checks to determine whether the asynchronous RAID configuration finished successfully. |

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| pecan_debug = False | (Boolean) Enable pecan debug mode. WARNING: this is insecure and should not be used in a production environment. |

| Configuration option = Default value | Description |
|---|---|
| [deploy] | |
| continue_if_disk_secure_erase_fails = False | (Boolean) Defines what to do if an ATA secure erase operation fails during cleaning in the Ironic Python Agent. If False, the cleaning operation will fail and the node will be put in clean failed state. If True, shred will be invoked and cleaning will continue. |
| erase_devices_metadata_priority = None | (Integer) Priority to run in-band clean step that erases metadata from devices, via the Ironic Python Agent ramdisk. If unset, will use the priority set in the ramdisk (defaults to 99 for the GenericHardwareManager). If set to 0, will not run during cleaning. |
| erase_devices_priority = None | (Integer) Priority to run in-band erase devices via the Ironic Python Agent ramdisk. If unset, will use the priority set in the ramdisk (defaults to 10 for the GenericHardwareManager). If set to 0, will not run during cleaning. |
| http_root = /httpboot | (String) ironic-conductor node’s HTTP root path. |
| http_url = None | (String) ironic-conductor node’s HTTP server URL. Example: http://192.1.2.3:8080 |
| power_off_after_deploy_failure = True | (Boolean) Whether to power off a node after deploy failure. Defaults to True. |
| shred_final_overwrite_with_zeros = True | (Boolean) Whether to write zeros to a node’s block devices after writing random data. This will write zeros to the device even when deploy.shred_random_overwrite_iterations is 0. This option is only used if a device could not be ATA Secure Erased. Defaults to True. |
| shred_random_overwrite_iterations = 1 | (Integer) During shred, overwrite all block devices N times with random data. This is only used if a device could not be ATA Secure Erased. Defaults to 1. |
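
The two shred options combine as sketched below. This is an illustration only: the helper `build_shred_command` is hypothetical, the device path is a placeholder, and the exact command line used by the Ironic Python Agent is not stated in this reference. The flags shown (`--force`, `--verbose`, `--iterations`, `--zero`) are standard GNU `shred` options.

```python
from typing import List


def build_shred_command(device: str,
                        random_iterations: int = 1,
                        final_zeros: bool = True) -> List[str]:
    """Build an argument list for GNU shred from the two [deploy] options.

    random_iterations mirrors shred_random_overwrite_iterations and
    final_zeros mirrors shred_final_overwrite_with_zeros.
    """
    if random_iterations < 0:
        raise ValueError("random_iterations must be non-negative")
    cmd = ["shred", "--force", "--verbose",
           f"--iterations={random_iterations}"]
    if final_zeros:
        # Zeros are written even when random_iterations is 0.
        cmd.append("--zero")
    cmd.append(device)
    return cmd


# Placeholder device path; nothing is executed here.
print(build_shred_command("/dev/sdX"))
print(build_shred_command("/dev/sdX", random_iterations=0))
```

The function only builds the argument list, so it is safe to run; executing the resulting command would destroy the data on the named device.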

| Configuration option = Default value | Description |
|---|---|
| [dhcp] | |
| dhcp_provider = neutron | (String) DHCP provider to use. “neutron” uses Neutron, and “none” uses a no-op provider. |

| Configuration option = Default value | Description |
|---|---|
| [disk_partitioner] | |
| check_device_interval = 1 | (Integer) Interval (in seconds) at which Ironic, after it has created the partition table, checks for activity on the attached iSCSI device before copying the image to the node. |
| check_device_max_retries = 20 | (Integer) The maximum number of times to check that the device is not accessed by another process. If the device is still busy after that, the disk partitioning will be treated as having failed. |
| [disk_utils] | |
| bios_boot_partition_size = 1 | (Integer) Size of BIOS Boot partition in MiB when configuring GPT partitioned systems for local boot in BIOS. |
| dd_block_size = 1M | (String) Block size to use when writing to the node’s disk. |
| efi_system_partition_size = 200 | (Integer) Size of EFI system partition in MiB when configuring UEFI systems for local boot. |
| iscsi_verify_attempts = 3 | (Integer) Maximum attempts to verify an iSCSI connection is active, sleeping 1 second between attempts. |
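
The `check_device_interval` and `check_device_max_retries` options in `[disk_partitioner]` describe a bounded polling loop, and `iscsi_verify_attempts` follows the same pattern with a fixed one-second sleep. A minimal sketch of such a loop is shown here; `wait_until_device_idle` and the `is_busy` callable are hypothetical stand-ins, not functions from Ironic or ironic-lib.

```python
import time
from typing import Callable


def wait_until_device_idle(is_busy: Callable[[], bool],
                           check_device_interval: float = 1,
                           check_device_max_retries: int = 20,
                           sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll is_busy() up to check_device_max_retries times.

    Returns True as soon as the device is idle, or False if it is still
    busy after the last check (partitioning would then be treated as failed).
    """
    for attempt in range(check_device_max_retries):
        if not is_busy():
            return True
        # Sleep only between checks, not after the final one.
        if attempt < check_device_max_retries - 1:
            sleep(check_device_interval)
    return False


# Simulated device that is busy for the first two checks.
states = iter([True, True, False])
print(wait_until_device_idle(lambda: next(states), sleep=lambda s: None))
```

With the defaults, the loop gives up after 20 checks spaced one second apart, so a device that stays busy is reported as failed after roughly 19 seconds of waiting.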

| Configuration option = Default value | Description |
|---|---|
| [glance] | |
| allowed_direct_url_schemes = | (List) A list of URL schemes that can be downloaded directly via the direct_url. Currently supported schemes: [file]. |
| auth_section = None | (Unknown) Config section from which to load plugin-specific options. |

auth_strategy = keystone |